Introduction

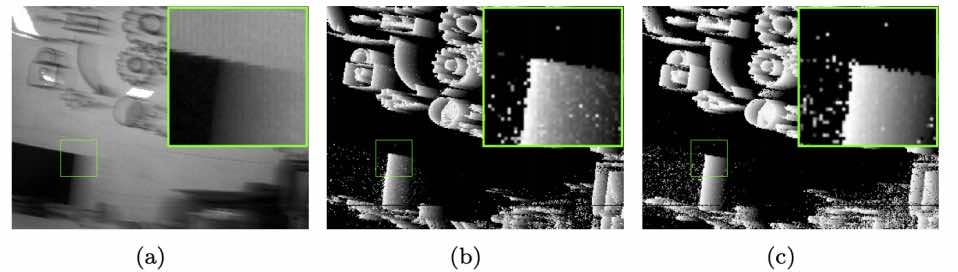

Many event-based motion deblurring methods learn from synthesized datasets composed of simulated events, blurry images, and sequences of sharp ground-truth images. However, the inconsistency between synthetic and real data degrades inference performance on real-world event cameras. The intrinsic physical noise of event cameras makes it difficult to simulate labeled events that closely match real event data. Although event simulators reduce the gap to some extent by modeling the pixel-to-pixel variation in the event threshold, additional noise effects such as background activity noise and false negatives remain, leading to a large discrepancy between the virtual events synthesized by event simulators and the real events emitted by event cameras. An alternative approach is to build a labeled dataset of real-world events accompanied by synthesized blurry images and then train networks on it. Unfortunately, such pairs are not easy to obtain: the data must be captured at slow motion speeds and under good lighting conditions to avoid motion blur. The subsequent blurry image synthesis and temporal alignment are tedious but indispensable. Furthermore, inconsistency still exists between the events associated with synthesized and real-world motion blur, because limited read-out bandwidth leads to more event timing variations, as shown in Fig. 1.
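To make the simulation gap concrete, the following is a minimal sketch of a toy event simulator, not the simulator used by any particular method. It assumes the common contrast-threshold model (an event fires when the log intensity at a pixel changes by more than that pixel's threshold), draws a per-pixel threshold to mimic pixel-to-pixel variation, and optionally injects background activity noise. The function name `simulate_events` and all parameter names and default values are hypothetical, and event times are simply spread evenly within each frame interval.

```python
import math
import random


def simulate_events(frames, timestamps, mean_threshold=0.2,
                    threshold_sigma=0.03, noise_rate_hz=0.0, seed=0):
    """Toy event simulation from a list of H x W intensity frames.

    Returns a time-sorted list of (t, x, y, polarity) tuples.
    All parameters are illustrative assumptions, not calibrated values.
    """
    rng = random.Random(seed)
    height, width = len(frames[0]), len(frames[0][0])
    eps = 1e-6  # avoids log(0) for black pixels

    # Pixel-to-pixel variation: every pixel gets its own contrast threshold.
    thresholds = [[max(0.01, rng.gauss(mean_threshold, threshold_sigma))
                   for _ in range(width)] for _ in range(height)]
    # Log intensity memorized by each pixel at its last event.
    reference = [[math.log(frames[0][y][x] + eps) for x in range(width)]
                 for y in range(height)]

    events = []
    for k in range(1, len(frames)):
        t_prev, t_curr = timestamps[k - 1], timestamps[k]
        for y in range(height):
            for x in range(width):
                diff = math.log(frames[k][y][x] + eps) - reference[y][x]
                c = thresholds[y][x]
                count = int(abs(diff) / c)  # number of threshold crossings
                polarity = 1 if diff > 0 else -1
                for j in range(1, count + 1):
                    # Simplification: spread events evenly in the interval.
                    t = t_prev + (t_curr - t_prev) * j / (count + 1)
                    events.append((t, x, y, polarity))
                reference[y][x] += polarity * count * c
                # Background activity noise: at most one spurious event per
                # pixel and interval (Bernoulli approximation of a Poisson
                # process, reasonable only when rate * interval is small).
                if rng.random() < noise_rate_hz * (t_curr - t_prev):
                    events.append((rng.uniform(t_prev, t_curr), x, y,
                                   rng.choice((-1, 1))))
    events.sort(key=lambda e: e[0])
    return events
```

Even with threshold variation and background activity noise, a model of this kind omits effects such as false negatives and read-out-induced timing variations, which is the kind of residual mismatch described above.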

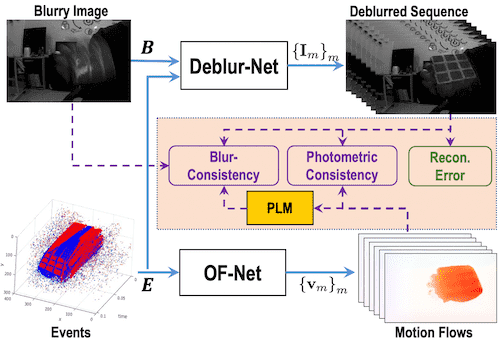

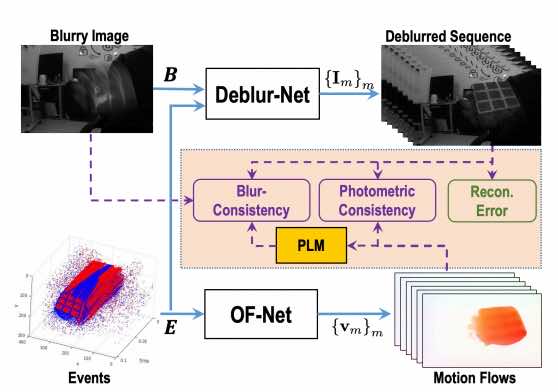

In this paper, we propose a novel framework for learning an event-based motion deblurring network in a self-supervised manner, in which real-world events and real-world motion-blurred images are exploited to alleviate the performance degradation caused by data inconsistency and to bridge the gap between simulation and real-world scenarios. Fig. 2 illustrates the overall architecture of our framework.

Results

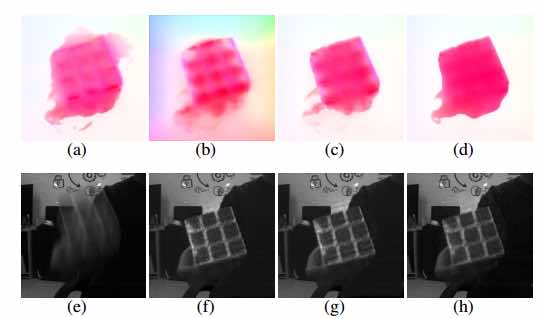

1. Results of Optical Flow

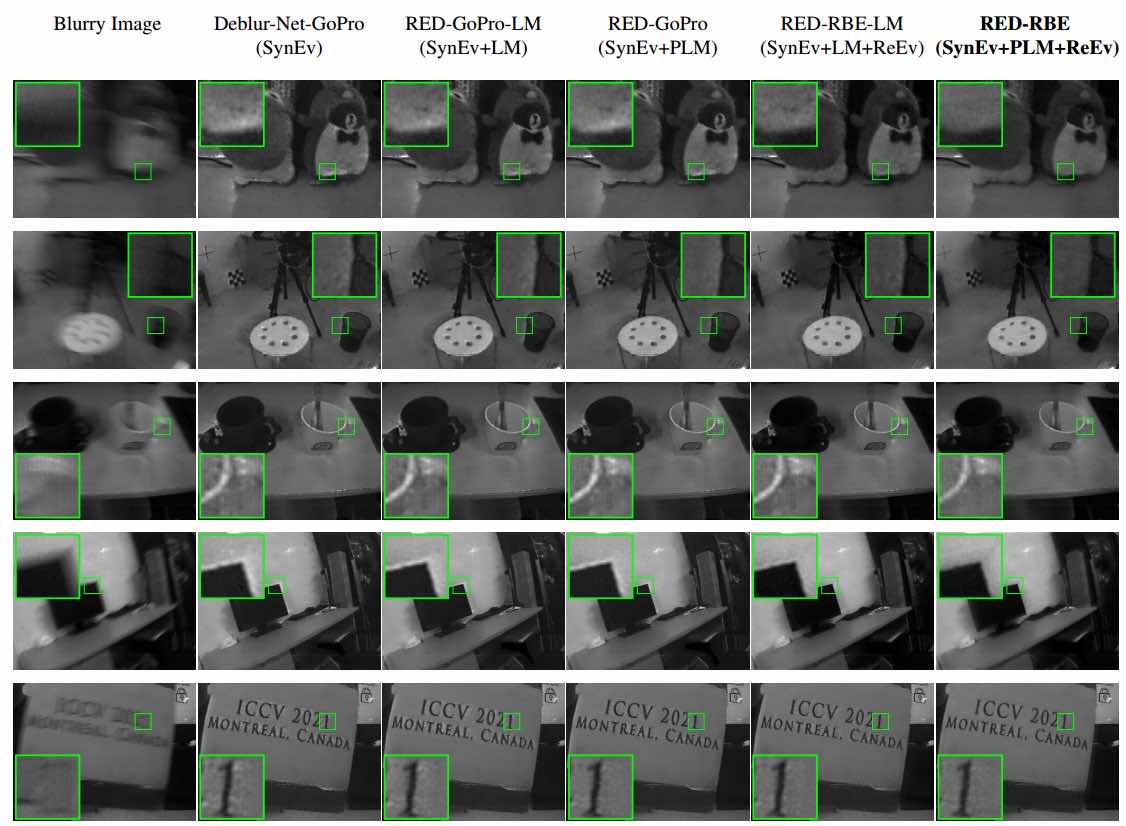

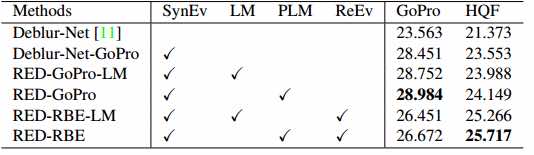

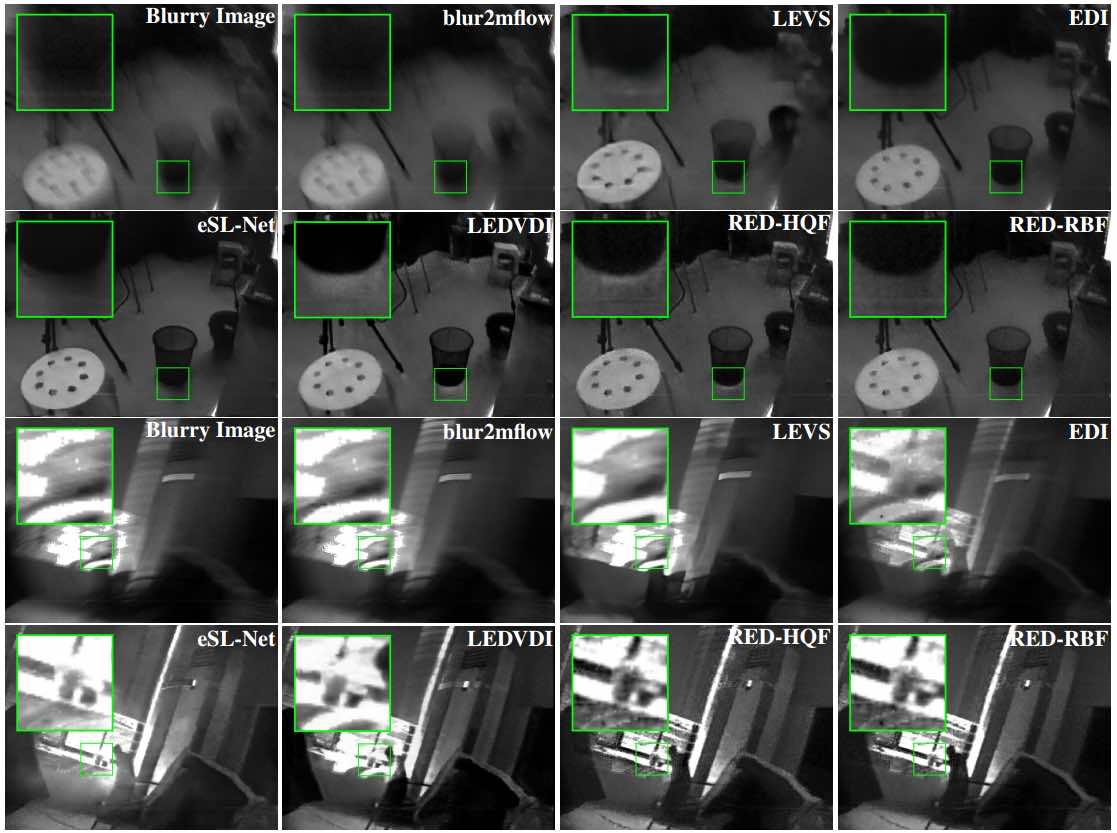

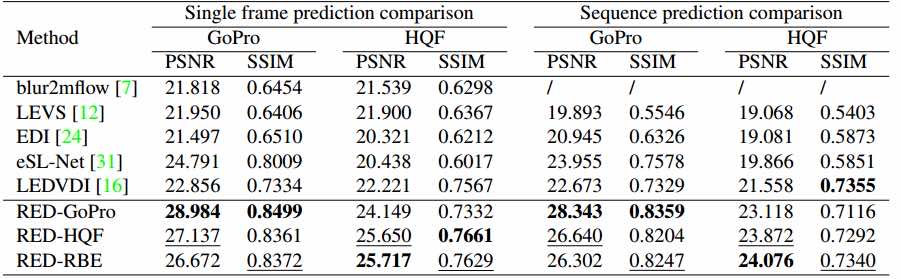

2. Comparisons with State-of-the-art Methods

3. Ablation Study